UniPDF with GOMEMLIMIT on a Memory-Intensive Application

Processing many PDF documents concurrently, especially documents with complex content, can exhaust memory and crash your Go application. Because Go (Golang) is a garbage-collected (“GC”) programming language, this has been a very real possibility.

Starting with Go 1.19, you can set a soft memory limit. The GOMEMLIMIT setting helps you both improve GC-related performance and avoid GC-related out-of-memory (“OOM”) situations.

In this article, we will cover:

- How memory allocations work in Go.

- Why it was far too easy to run out of memory before Go 1.19.

- What GOMEMLIMIT is and why it can prevent premature OOM kills.

- An experiment on UniPDF, first without and then with GOMEMLIMIT.

How Memory Allocations Work in Go

What is a garbage-collected language?

In a garbage-collected language, such as Go, C#, or Java, the programmer doesn’t have to deallocate objects manually after using them. A GC cycle runs periodically to collect memory no longer needed and ensure it can be assigned again. Using a garbage-collected language is a trade-off between development complexity and execution time. Some CPU time has to be spent at runtime to run the GC cycles.

Go’s garbage collector is highly concurrent and quite efficient. That makes Go a great compromise between both worlds. The syntax is straightforward, and the potential for memory-related bugs (such as memory leaks) is low. At the same time, it’s not uncommon for Go programs to spend considerably less than 1% of their CPU time on GC-related activities. Given how little the average program is optimized for execution efficiency, trading in just 1% of execution time is a pretty good deal. After all, who wants to worry about freeing memory after it’s been used?

However, as the next few paragraphs show, there are some caveats (and a terrific new solution called GOMEMLIMIT). If you aren’t careful, you can run OOM even when you shouldn’t. But before we dive into this, we need to talk about stack and heap allocations and why something ends up on the heap.

The stack vs. the heap

In short, there are two ways to allocate memory: on the stack or on the heap. A stack allocation is short-lived and typically very cheap. No garbage collection is required for a stack allocation, because the end of the function is also the end of the variable’s lifetime. A heap allocation, on the other hand, is long-lived and considerably more expensive. When allocating onto the heap, the runtime must find a contiguous piece of memory where the new variable fits. Additionally, the variable must be garbage-collected once it is no longer used. Both operations are orders of magnitude more expensive than a stack allocation. Let’s look at two simple examples. The first is a function that takes in two numbers, squares them, and returns the sum of the squares:

func SumSquares(a, b float64) float64 {
	aSquared := a * a
	bSquared := b * b
	return aSquared + bSquared
}

Admittedly, this function is a bit more verbose than it would have to be. That is on purpose, to show several variables that can live on the stack. There are four variables (a, b, aSquared, and bSquared). None of them “escape” outside of this function block. As a result, the Go runtime can allocate them on the stack. In other words, these allocations are cheap, and the garbage collector will never know about them. Now, let’s look at something that escapes onto the heap. An example application would be a cache. A cache is long-lived and needs to stick around, even after a specific function that interacts with the cache has returned. For example:

var cache = map[string]string{}

func Put(key, value string) {

cache[key] = value

}

In the above example, the cache variable is allocated on the heap. It exists before Put is called and after Put has returned. That is by no means the only criterion for why something escapes to the heap, but it should be enough for our purposes of understanding GC-related OOM situations.
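To see the difference between the two cases in practice, you can count heap allocations per call. The sketch below is an illustration, not part of UniPDF. It uses testing.AllocsPerRun from the standard library and reuses SumSquares from above. It also adds a hypothetical newPage helper whose result escapes because it returns a pointer to a local variable.

```go
package main

import (
	"fmt"
	"testing"
)

// SumSquares is the stack-only example from the article.
func SumSquares(a, b float64) float64 {
	aSquared := a * a
	bSquared := b * b
	return aSquared + bSquared
}

// page is a hypothetical buffer type used only for this demo. It is larger
// than 16 bytes, so it is not served by Go's tiny allocator.
type page [4096]byte

// newPage returns a pointer to a local variable. The value must outlive the
// call, so escape analysis moves it to the heap.
func newPage() *page {
	var p page
	return &p
}

// Package-level sinks keep the compiler from optimizing the calls away.
var (
	sinkF float64
	sinkP *page
)

func main() {
	stackAllocs := testing.AllocsPerRun(100, func() {
		sinkF = SumSquares(3, 4)
	})
	heapAllocs := testing.AllocsPerRun(100, func() {
		sinkP = newPage()
	})
	fmt.Printf("SumSquares: %.0f heap allocations per call\n", stackAllocs)
	fmt.Printf("newPage:    %.0f heap allocations per call\n", heapAllocs)
}
```

Running it should report 0 allocations for SumSquares and 1 for newPage. You can also ask the compiler for its escape-analysis decisions with go build -gcflags=-m, which prints lines such as "moved to heap: p".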

When things accidentally escape to the heap

The previous examples have shown two distinct cases: short-lived allocations, which end up on the stack, and long-lived allocations, which end up on the heap. In reality, it’s not always this simple. Sometimes you will end up with unintentional heap allocations: you allocate a variable that you believe should be short-lived, yet it is allocated on the heap anyway. Why and how that happens is a blog post of its own. It is one of my favorite topics about Go memory management, and I’d be happy to write that post, so please let me know. For this one, it’s enough to understand that heap allocations sometimes happen even when we think they shouldn’t. That matters because those allocations put pressure on the GC, which is a prerequisite for an unexpected OOM situation.

GOMEMLIMIT

Go 1.19 introduced GOMEMLIMIT, which allows specifying a soft memory cap. It does not replace GOGC but works in conjunction with it.

You can set GOGC for a scenario in which memory is always available. And at the same time, you can trust that GOMEMLIMIT automatically makes the GC more aggressive when memory is scarce.

In other words, GOMEMLIMIT is precisely the missing piece: on its own, GOGC only sets a target relative to the live heap and has no notion of how much memory is actually available.

If the live heap is low (e.g., 100MB), we can delay the next GC cycle until the heap has doubled (200MB). But if the heap has grown close to our limit, the GC will run more often to prevent us from ever running OOM.
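The arithmetic behind this fits in a few lines. The function below is a deliberately simplified model, assumed for illustration: the GOGC goal is the live heap plus GOGC percent of it, and a memory limit caps that goal. The real pacer also subtracts non-heap memory such as goroutine stacks and runtime metadata from the limit, so treat the numbers as approximations.

```go
package main

import "fmt"

// heapGoal is a simplified model of the GC heap goal. GOGC sets a goal
// proportional to the live heap, and a non-zero limit caps that goal.
// In this sketch, limit == 0 means "no limit".
func heapGoal(liveHeap, gogc, limit uint64) uint64 {
	goal := liveHeap + liveHeap*gogc/100
	if limit > 0 && goal > limit {
		goal = limit
	}
	return goal
}

func main() {
	const MiB = 1 << 20
	// Low live heap: the GOGC goal (2x live) is far below the limit.
	fmt.Println(heapGoal(100*MiB, 100, 800*MiB) / MiB) // 200
	// Live heap close to the limit: the limit, not GOGC, decides.
	fmt.Println(heapGoal(700*MiB, 100, 800*MiB) / MiB) // 800
	// No limit: the goal simply doubles, as in the first experiment below.
	fmt.Println(heapGoal(950*MiB, 100, 0) / MiB) // 1900
}
```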

Why a soft limit? What is the difference between a soft and a hard limit?

The Go authors explicitly label GOMEMLIMIT a “soft” limit. That means the Go runtime does not guarantee that memory usage will stay below the limit. Instead, it uses the limit as a target.

The goal is to fail fast in an impossible-to-solve situation: Imagine we set the limit to a value just a few kilobytes larger than the live heap. The GC would have to run constantly.

Regular program execution and the GC would then compete for the same resources, and the application would stall. Since there is no way out other than running with more memory, the Go runtime prefers an OOM situation. All the usable memory has been used up, and nothing can be freed anymore.

That is a failure scenario, and failing fast is preferred. That is what makes the limit a soft limit. Crossing it (and thus triggering an OOM kill from the kernel) is still possible, but only when the live heap has used up all available memory and there is nothing else the runtime can do.

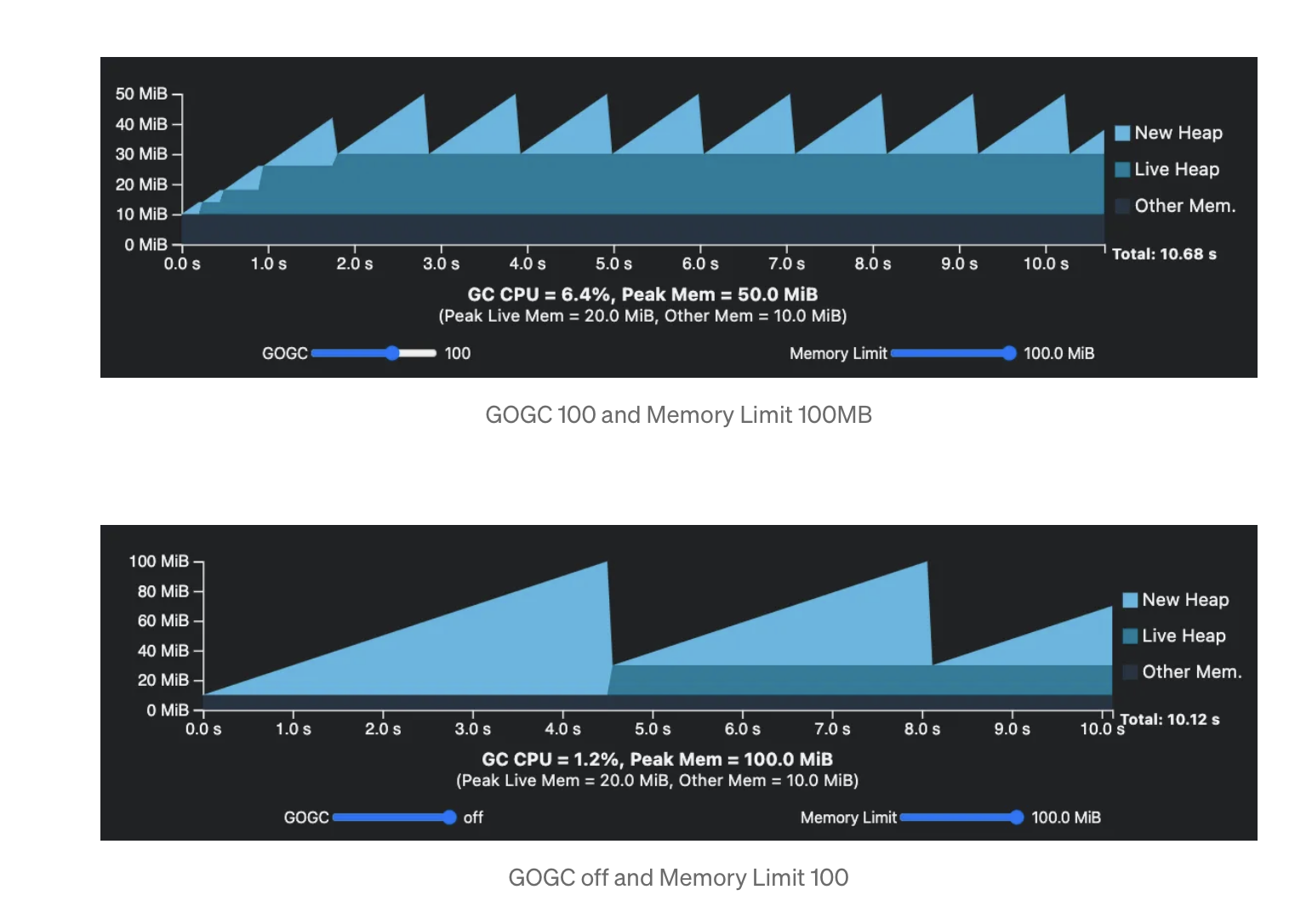

The chart above compares how often the GC runs when GOGC is set and when it is turned off. With GOGC set to 100, the GC runs once memory reaches about 50MiB. With GOGC turned off, the GC runs only when memory usage approaches the maximum available.

UniPDF with GOMEMLIMIT – With & Without

UniPDF is a Golang library that helps with PDF and document management tasks. It’s designed to be secure and can be used for a variety of tasks, including:

- Creating invoices: Create, customize, and edit invoices, and generate them from templates

- Merging PDF pages: Merge PDF pages in any order

- Protecting PDF files: Password-protect report and invoice PDF files

- Extracting data: Extract essential elements like text, images, and tables from complex documents

- Creating PDF reports: Create PDF reports

- Creating table PDF reports: Create table PDF reports

In this part, we will run a memory-intensive process with UniPDF. The goal is to extract all images from a PDF file and then add each extracted image to a UniPDF creator page. The complete example for this guide is available here: https://github.com/sampila/unipdf-memory-limit

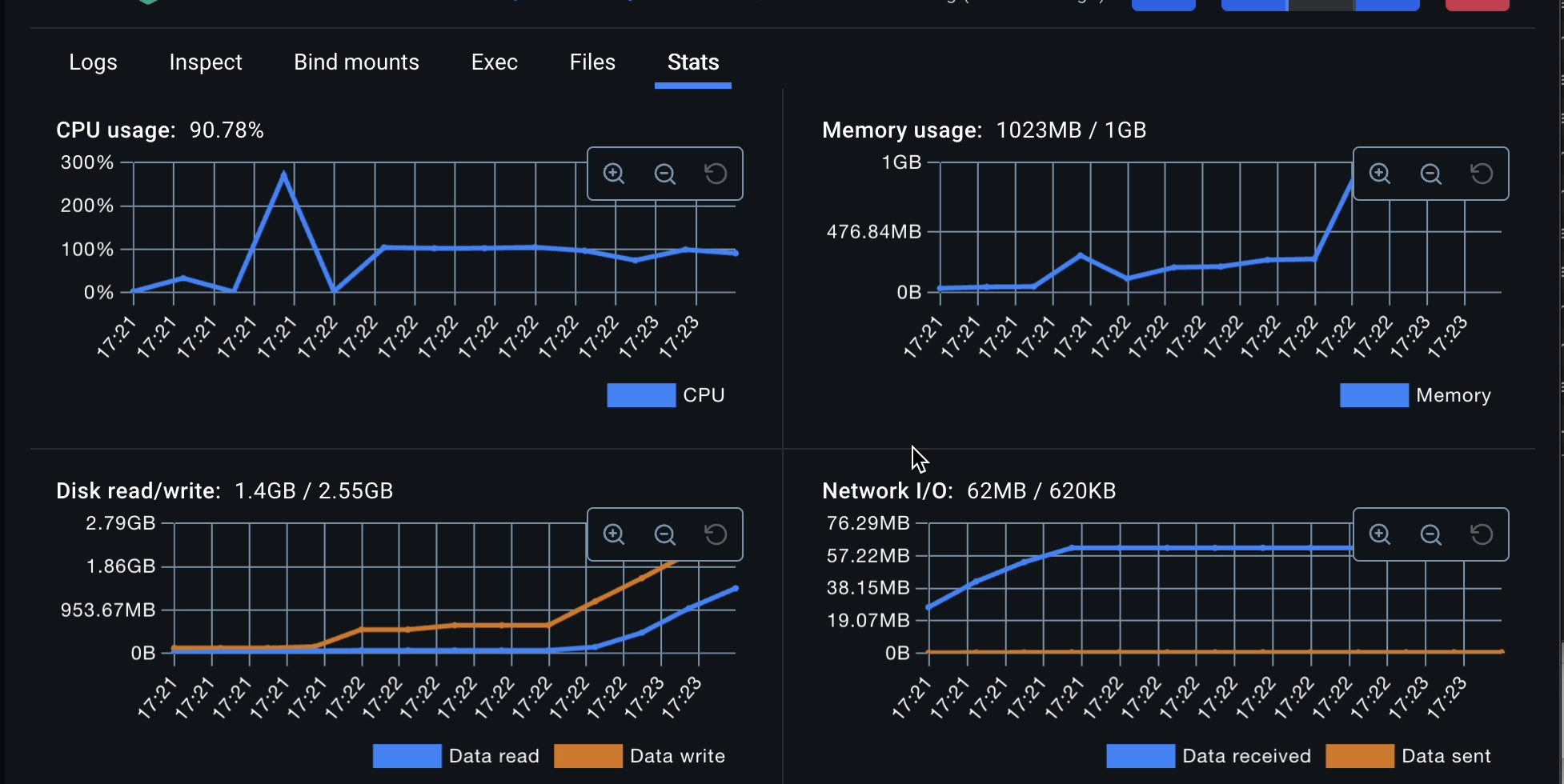

Without GOMEMLIMIT

For the first run, we have the following settings:

- GOMEMLIMIT is not set at all.

- GOGC is set to 100.

- Using Docker, the container memory limit is set to 1GiB.

Based on our rough estimates, this should use more than 1GiB of live memory. Will we run out of memory? Here’s the command to start an instance with the parameters described above:

docker run -m 1GiB \
  --mount type=bind,source=${PWD},target=/app/ \
  -w /app/ \
  --entrypoint /bin/sh \
  unipdf-memory-test \
  run_test.sh

Our run_test.sh file looks like this:

#!/bin/bash
export GOGC=100
export GODEBUG=gctrace=1
export UNIDOC_LICENSE_API_KEY="UNICLOUD_LICENSE_API_KEY"
go mod init unipdf_memory_limit && go mod tidy
go get -u github.com/unidoc/unipdf/v3/...
go run main.go unipdf-large-pdf.pdf result-docker.pdf

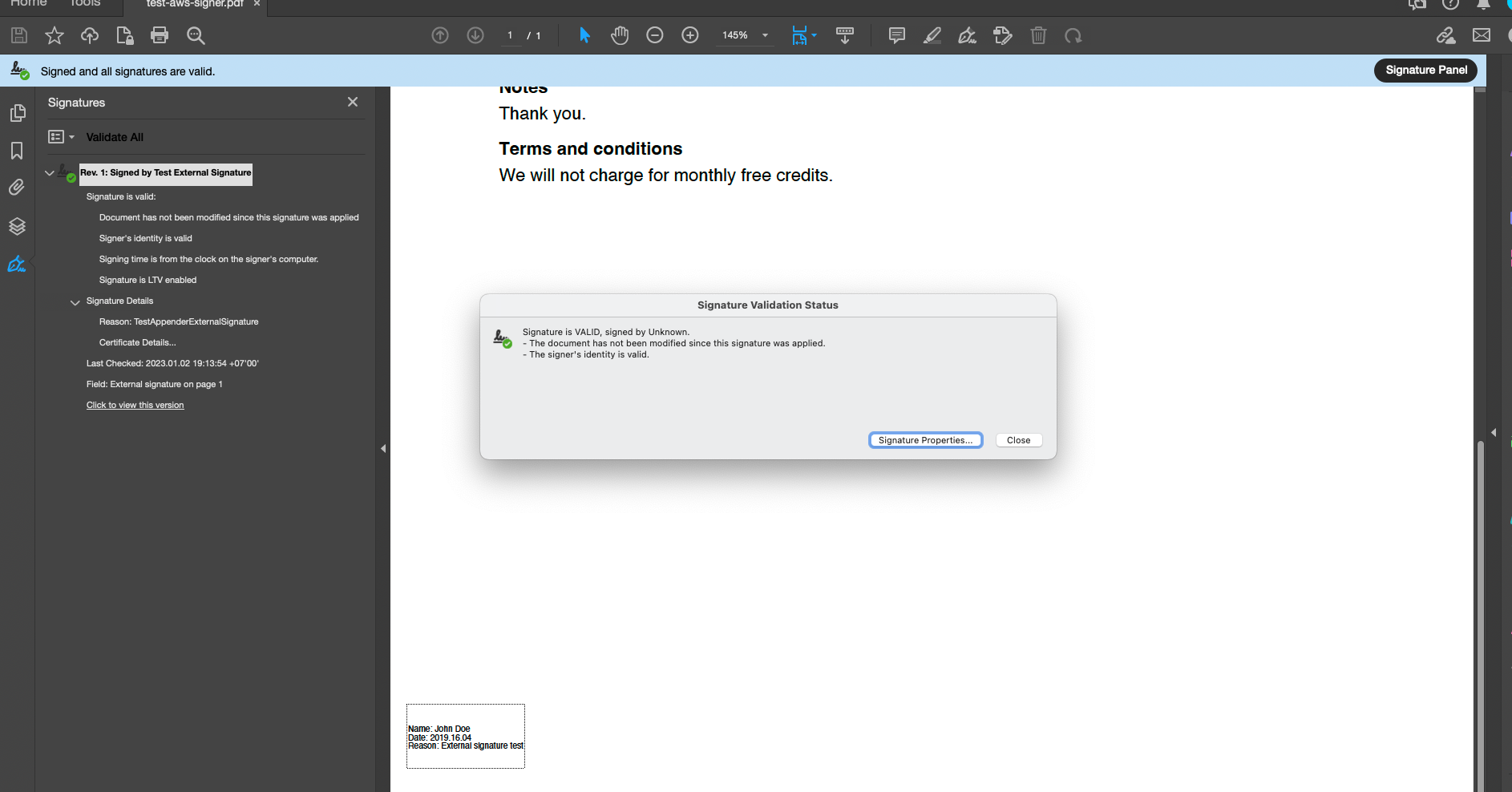

I set GODEBUG=gctrace=1, which makes the GC more verbose: it logs every time it runs, and each log line prints both the current heap size and the heap goal. Extracting the images goes well, but once the process starts adding each image to the UniPDF creator page it is, as expected, terminated for running out of memory.

Here are the GC logs from adding the images to the UniPDF creator page, just before the application is killed by the OOM killer:

gc 248 @90.886s 0%: 0.046+1.4+0.032 ms clock, 0.37+0.070/0.61/0+0.26 ms cpu, 124->124->20 MB, 124 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 249 @91.139s 0%: 0.048+0.74+0.029 ms clock, 0.38+0.071/0.46/0.087+0.23 ms cpu, 51->51->51 MB, 51 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 250 @91.902s 0%: 0.042+1.6+0.039 ms clock, 0.34+0.075/0.54/0+0.31 ms cpu, 107->107->107 MB, 107 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 251 @93.106s 0%: 0.034+1.5+0.028 ms clock, 0.27+0.078/0.59/0.007+0.23 ms cpu, 215->215->215 MB, 215 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 252 @95.305s 0%: 0.037+1.5+0.037 ms clock, 0.29+0.091/0.57/0.003+0.30 ms cpu, 431->431->430 MB, 431 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 253 @99.174s 0%: 0.045+1.3+0.038 ms clock, 0.36+0.068/0.73/0.019+0.30 ms cpu, 861->861->859 MB, 861 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 254 @105.873s 0%: 3.1+7.6+0.028 ms clock, 24+2.3/11/10+0.23 ms cpu, 1716->1716->892 MB, 1720 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 255 @111.825s 0%: 2.2+6.3+0.028 ms clock, 18+4.9/11/1.5+0.22 ms cpu, 1780->1780->928 MB, 1784 MB goal, 0 MB stacks, 0 MB globals, 8 P
GC forced
gc 32 @128.356s 0%: 14+55+0.008 ms clock, 115+0/105/0+0.065 ms cpu, 5->5->4 MB, 8 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 256 @120.880s 0%: 1.2+11+0.022 ms clock, 10+4.5/18/22+0.18 ms cpu, 1854->1854->939 MB, 1858 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 257 @128.856s 0%: 2.9+10+0.046 ms clock, 23+0.48/20/20+0.37 ms cpu, 1904->1904->947 MB, 1904 MB goal, 0 MB stacks, 0 MB globals, 8 P
gc 258 @137.249s 0%: 4.0+13+0.021 ms clock, 32+3.5/23/43+0.16 ms cpu, 1891->1892->953 MB, 1895 MB goal, 0 MB stacks, 0 MB globals, 8 P
signal: killed

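As you can see, the GC keeps running right up to the end, but with GOGC=100 each cycle lets the heap grow to roughly twice the live heap before the next collection (in the last cycle, 953 MB live against a 1895 MB goal). By the time the GC tries to free memory, it is too late, and the kernel kills the application for running out of memory. (The “GC forced” and “gc 32” lines, with their separate cycle counter, most likely come from a different Go process, such as the go command itself, which inherits the same GODEBUG setting.)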

With GOMEMLIMIT

I will run the same process again, but this time with GOMEMLIMIT set to “800MiB”, by modifying run_test.sh:

#!/bin/bash
export GOMEMLIMIT="800MiB"
export GOGC=100
export GODEBUG=gctrace=1
export UNIDOC_LICENSE_API_KEY="UNICLOUD_LICENSE_API_KEY"
go mod init unipdf_memory_limit && go mod tidy
go get -u github.com/unidoc/unipdf/v3/...
go run main.go unipdf-large-pdf.pdf result-docker.pdf

When we run the Docker container this time, the process completes and writes the result to result-docker.pdf. Because the process is memory intensive, memory usage still goes beyond the GOMEMLIMIT value at times, but the GC runs much more frequently once usage approaches 800MiB.
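The experiment hard-codes 800MiB for a 1GiB container. If you deploy to containers of different sizes, one option is to derive the limit at startup. The sketch below assumes cgroup v2, where the container limit is exposed in /sys/fs/cgroup/memory.max (cgroup v1 uses a different file). It also assumes you want the same ratio as this article (800MiB / 1GiB = 0.78125). It leaves an explicitly set GOMEMLIMIT environment variable untouched.

```go
package main

import (
	"fmt"
	"math"
	"os"
	"runtime/debug"
	"strconv"
	"strings"
)

// limitFromCgroup turns the contents of a cgroup v2 memory.max file into a
// memory limit equal to a fraction of the container limit. It returns false
// for "max" (no container limit) or for input it cannot parse.
func limitFromCgroup(content string, fraction float64) (int64, bool) {
	s := strings.TrimSpace(content)
	if s == "max" {
		return 0, false
	}
	n, err := strconv.ParseInt(s, 10, 64)
	if err != nil || n <= 0 {
		return 0, false
	}
	return int64(math.Floor(float64(n) * fraction)), true
}

func main() {
	// Respect an explicit GOMEMLIMIT from the environment.
	if os.Getenv("GOMEMLIMIT") != "" {
		return
	}
	// Assumption: cgroup v2 mounted at its usual path inside the container.
	data, err := os.ReadFile("/sys/fs/cgroup/memory.max")
	if err != nil {
		fmt.Println("no cgroup v2 memory limit found; keeping defaults")
		return
	}
	// 800MiB out of 1GiB, the ratio used in this article's experiment.
	if limit, ok := limitFromCgroup(string(data), 0.78125); ok {
		debug.SetMemoryLimit(limit)
		fmt.Println("memory limit set to", limit, "bytes")
	}
}
```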

UniPDF Image Lazy Load with GOMEMLIMIT

To use memory more efficiently when processing images, UniPDF has a lazy-loading feature for images. A usage example is available at https://github.com/unidoc/unipdf-examples/blob/master/image/pdf_images_to_pdf_lazy.go.

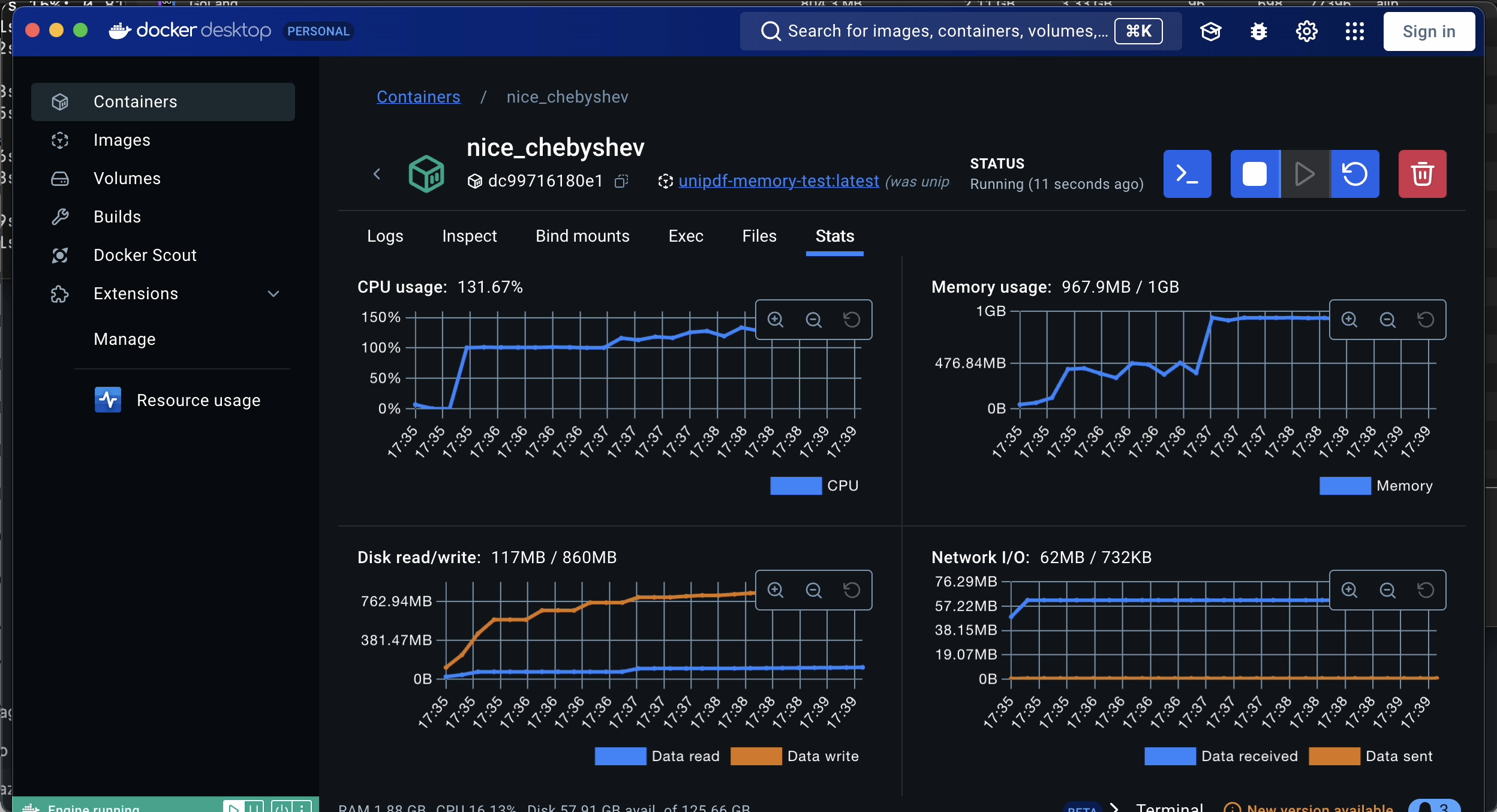

With image lazy loading enabled, each image is released from memory right after it is added to the UniPDF creator page. That behavior works well together with GOMEMLIMIT. As the memory stats chart shows, running the same process with image lazy loading never reached 1GiB of memory, even at its peak.
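The reason lazy loading helps can be shown without UniPDF at all. The sketch below is a toy model with hypothetical loadImage and addToPage helpers, which are not UniPDF’s API. The eager version decodes every image before adding any, so all buffers are alive at once. The lazy version loads each image just before it is added, so only one buffer is alive at a time. Peak memory is tracked by simple bookkeeping rather than measured from the runtime.

```go
package main

import "fmt"

// loadImage stands in for decoding one image (hypothetical); here it only
// allocates a buffer of the given size.
func loadImage(size int) []byte { return make([]byte, size) }

// addToPage stands in for drawing an image onto an output page
// (hypothetical); here it only reads the buffer length.
func addToPage(img []byte) int { return len(img) }

// eagerPeak loads every image before adding any, so all buffers are
// referenced at the same time. It returns the peak number of bytes held.
func eagerPeak(sizes []int) int {
	imgs := make([][]byte, 0, len(sizes))
	held, peak := 0, 0
	for _, s := range sizes {
		imgs = append(imgs, loadImage(s))
		held += s
		if held > peak {
			peak = held
		}
	}
	for _, img := range imgs {
		addToPage(img)
	}
	return peak
}

// lazyPeak loads each image only when it is added. Once the next iteration
// starts, the previous buffer is no longer referenced and can be collected.
func lazyPeak(sizes []int) int {
	peak := 0
	for _, s := range sizes {
		img := loadImage(s)
		addToPage(img)
		if len(img) > peak {
			peak = len(img)
		}
	}
	return peak
}

func main() {
	sizes := []int{3 << 20, 5 << 20, 2 << 20} // three images: 3, 5 and 2 MiB
	fmt.Println("eager peak MiB:", eagerPeak(sizes)>>20) // 10
	fmt.Println("lazy peak MiB:", lazyPeak(sizes)>>20)   // 5
}
```

Eager loading peaks at the sum of all image sizes, while lazy loading peaks at the largest single image. That smaller, steadier live heap is what gives GOMEMLIMIT room to work.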

How does GOMEMLIMIT prevent OOM?

GOMEMLIMIT makes the GC more aggressive when little memory is available but keeps it relaxed when there is plenty. The trade-off is higher CPU usage while the heap is close to the limit.

Conclusion

- Before Go 1.19, the Go runtime could only set relative GC targets. That made it very hard to use the available memory efficiently.

- In our experiments, we showed that our application crashed in a 1GiB container while processing the PDF and keeping many images in memory.

- After introducing GOMEMLIMIT=800MiB, our application no longer crashed and used the available memory efficiently, and the new PDF file built from the extracted images was created.

- Use image lazy loading when possible if you are working with PDFs that involve a lot of image processing.

A Go application with heavy memory usage still has to keep its memory allocations efficient. Setting GOMEMLIMIT can help you get the most out of your machines and prevent OOM kills. The trade-off is higher CPU usage when your application is already running a memory-intensive process.

Imagine how efficient your application can be when processing a large number of PDF files with UniPDF and GOMEMLIMIT.